Talk, Draw, Generate: 10 Ways for Creating Software with AI

From AI-assisted development to Vibe Coding and CHOP: Exploring the emerging terminology that defines how we collaborate with AI to write code

Hey there! AI is changing how we make software, and I’m seeing lots of new terms pop up left and right. Let me break down the main ways people are talking about working with AI to write code these days. The IT industry is really not great at coining meaningful terms (microservices, serverless, noSQL), and I feel that with AI it will only get worse (vibe coding?). Which terms are here to stay, only time will tell. Let’s look at the terms used for AI-based code creation today.

1. AI-Assisted Development

AI-Assisted Development: leveraging AI tools to support developers in planning, writing, testing, and debugging code. Humans maintain primary responsibility while AI provides autocomplete suggestions, answers questions, explains code, and automates repetitive tasks.

This approach enhances rather than replaces traditional workflows. The term is referenced in numerous resources, including the book AI-Assisted Programming, which I reviewed in the past. It is probably the most widely used term out there.

2. AI-Native Development

AI-Native Dev: embeds AI deeply into every phase of the software development lifecycle. Similar to "Cloud-Native" for cloud computing, AI becomes foundational rather than supplemental, with software emerging almost as a by-product of requirements and specifications.

The term was popularized by Guy Podjarny and Simon Maple, hosts of the AI Native Dev Podcast, who explore (in this video specifically) how AI fundamentally reshapes development as a whole.

3. Chat-Oriented Programming (CHOP)

Chat-Oriented Programming emphasizes coding through iterative conversations with AI (chatting), refining prompts to achieve the desired output. This method enables rapid prototyping without deep syntax knowledge, creating an accessible coding experience based on natural language dialogue.

Steve Yegge coined this term in his article The Death of the Junior Developer, highlighting how conversational interfaces transform coding workflows.
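
The iterative loop described above can be sketched as a growing message history that is passed to the model again on every turn. This is only an illustration: `fake_model` is a hypothetical stand-in so the example runs offline, and a real chat-based tool would replace it with a call to an actual model.

```python
from typing import Callable

Message = dict[str, str]


def fake_model(history: list[Message]) -> str:
    """Hypothetical stand-in for an AI model: reports how many user turns it saw."""
    user_turns = [m for m in history if m["role"] == "user"]
    return f"# draft {len(user_turns)}: addresses '{user_turns[-1]['content']}'"


def chat_turn(history: list[Message], prompt: str,
              model: Callable[[list[Message]], str]) -> str:
    """Append the user's prompt, get a reply based on the full history, record it."""
    history.append({"role": "user", "content": prompt})
    reply = model(history)
    history.append({"role": "assistant", "content": reply})
    return reply


# Each refinement builds on everything said so far in the conversation.
history: list[Message] = []
chat_turn(history, "Write a function that parses a CSV line", fake_model)
latest = chat_turn(history, "Also handle quoted fields", fake_model)
print(latest)
```

The point of the sketch is that the second prompt does not restate the first one; the accumulated history carries the context.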

4. Vibe Coding 🎧

Vibe Coding uses natural language to convey intent—like "build me a sleek login page"—letting AI handle implementation details. It prioritizes overall feel over precise specifications, making it ideal for quick prototyping though typically requiring refinement for production.

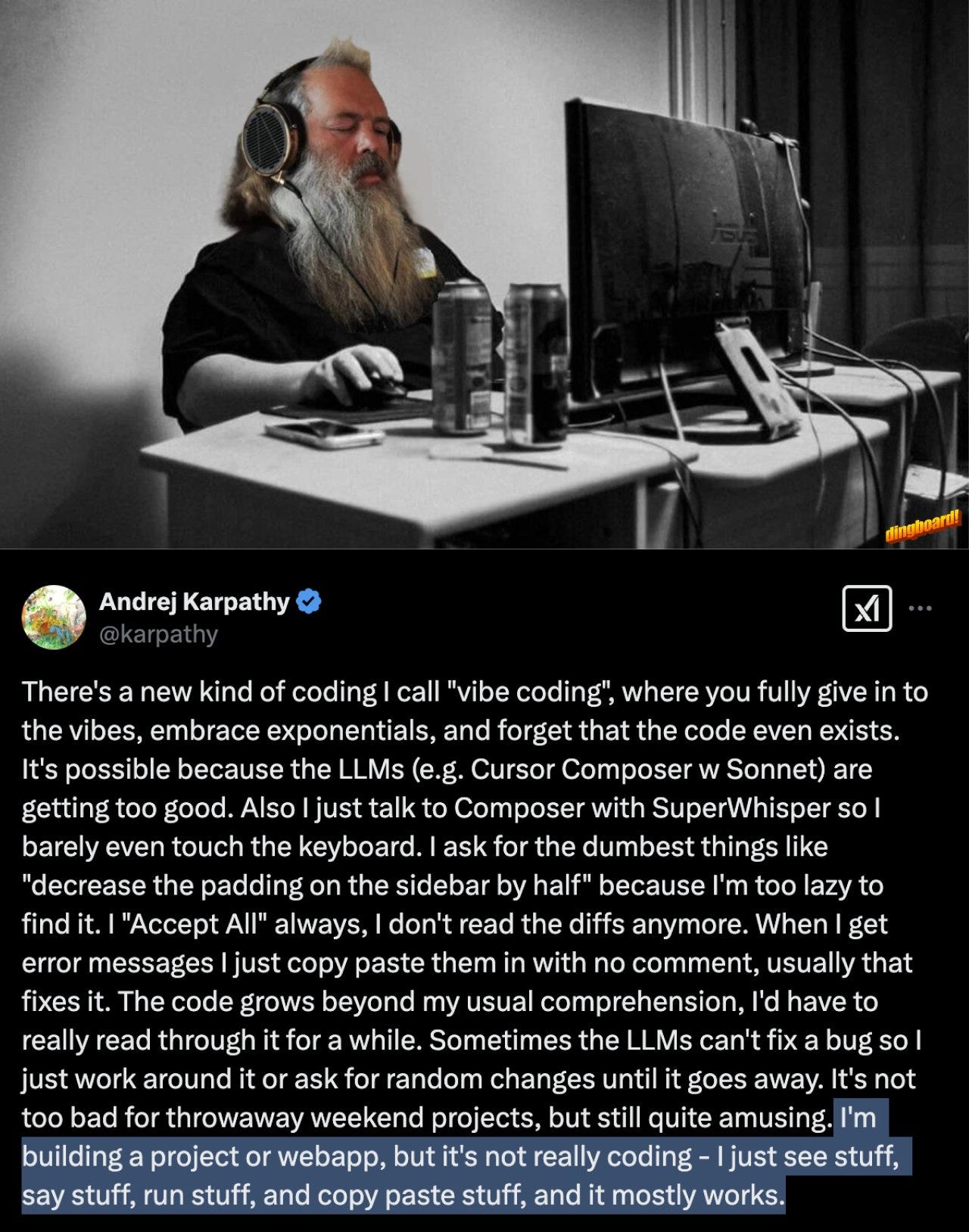

Vibe coding emerged

Andrej Karpathy, former Director of AI at Tesla and OpenAI founding member, coined this term in an X post that sparked widespread discussion. The rest is history.

5. Prompt-Driven Development

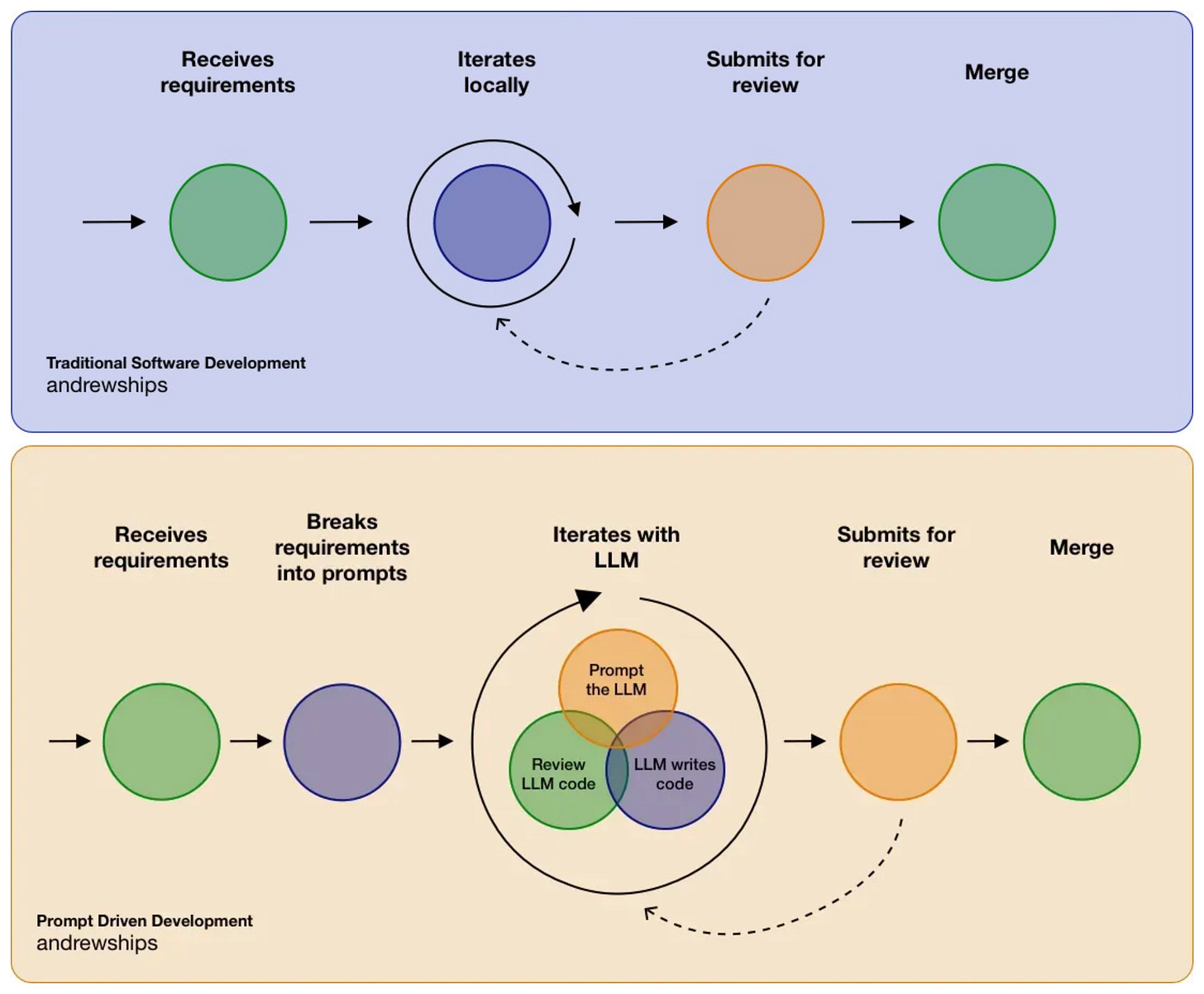

Prompt-Driven Development describes crafting precise text prompts to guide AI in generating code and documentation. It is like Test-Driven Development but… with prompts. Developers become "prompt engineers," focusing on constructing effective instructions rather than writing implementation details directly.

Andrew Miller has done a comprehensive write-up with a fresh perspective on integrating prompts into development practices.
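
As a rough illustration of treating prompts as first-class development artifacts, the sketch below assembles a structured prompt from a task, a list of constraints, and an expected output format. The `PromptSpec` class and its field names are hypothetical, chosen only for this example; no particular AI tool or API is assumed.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a code-generation prompt."""
    task: str
    language: str
    constraints: list[str] = field(default_factory=list)
    output_format: str = "a single code block with no commentary"

    def render(self) -> str:
        """Build the final prompt text that would be sent to an AI tool."""
        lines = [f"Task: {self.task}", f"Language: {self.language}"]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {c}" for c in self.constraints)
        lines.append(f"Output: {self.output_format}")
        return "\n".join(lines)


spec = PromptSpec(
    task="Write a function that validates an email address",
    language="Python",
    constraints=["Use only the standard library", "Include type hints"],
)
prompt = spec.render()
print(prompt)
```

Keeping the prompt in a structure like this means it can be versioned and reviewed like any other source file.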

6. Generative Programming

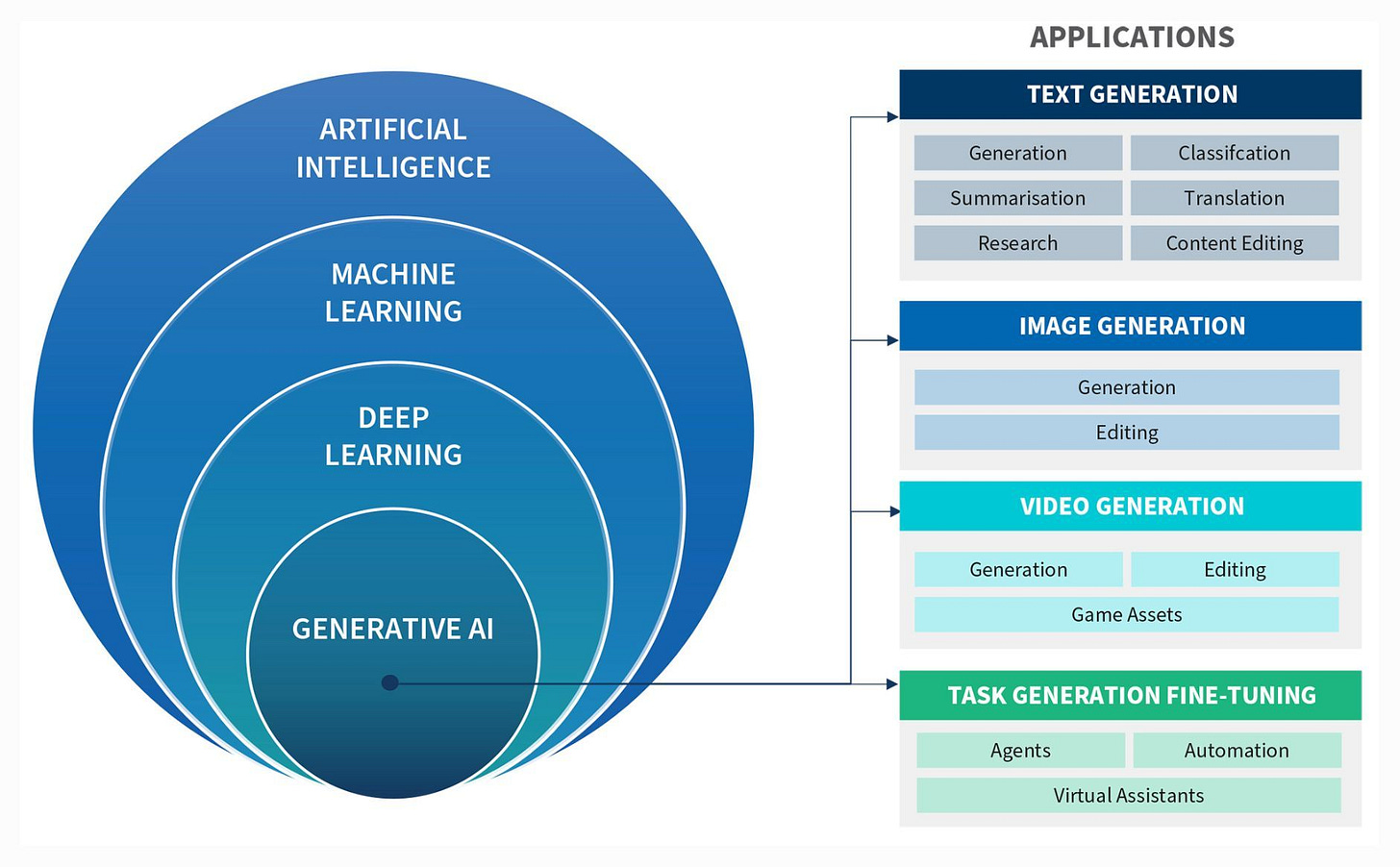

The big shift is in how code gets made: it's generated by AI, not written by hand. And it is not just any AI, but a specific branch of it, GenAI, that enabled this transformation.

While other terms focus on techniques (chat, acceptance test) or tools (AI assisted), Generative Programming highlights the core revolution: code is generated regardless of the triggers: text prompts, chat conversations, images, diagrams, or voice commands.

There can be many models and techniques, and all kinds of ways to poke the model: text, diagrams, voice, multiple rounds of back and forth. But the enabler (GenAI) and the fact that the code is generated are here to stay. Hence the name of this newsletter, GenerativeProgrammer.

7. Speech-to-Code

Speech-to-Code: considering that humans speak faster than they type, it is a no-brainer to transform verbal instructions into code. This approach focuses on high-level spoken prompts while AI handles syntax details, creating a fluid development process with accessibility benefits for developers with physical limitations.

Addy Osmani explores this concept in his article "Speech-to-Code: Vibe Coding with Voice", demonstrating how combining voice commands with modern AI coding editors creates a more natural programming workflow.

8. Pseudocode

SudoLang, for example, is a DSL-like pseudocode programming language designed specifically to collaborate with AI models like ChatGPT, Claude, and Llama, enabling developers to define behaviors using natural language constraints.

Instead of rigid prompts, it allows describing what you want—like "ensure the app runs smoothly on low bandwidth"—with features like interfaces, semantic pattern matching, and Mermaid diagram integration. A middle ground between a programming language and a free-form prompt.

9. Specification-Driven AI Development

This approach combines established acceptance testing methodologies with AI's code generation capabilities. By writing clear test-driven specifications first, developers can harness AI's creative power while maintaining precision and alignment with business requirements. We already know how to do this.

🧠 Dave Farley (co-author of Continuous Delivery) argues in his Acceptance Testing Is the FUTURE of Programming video that this combination provides the necessary guardrails for AI-generated code. And I agree with him fully.
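
A minimal sketch of the idea: the acceptance criteria are written first as executable checks, and any implementation, whether AI-generated or hand-written, must pass them before it is accepted. The shopping-cart scenario, the `cart_total` function, and its discount rule are made up for illustration, and the implementation shown is a hand-written stand-in for what an AI tool might produce.

```python
def acceptance_spec(cart_total):
    """Executable specification, written before any implementation exists.

    `cart_total` is any callable taking a list of (price_cents, quantity) pairs.
    """
    # An empty cart costs nothing.
    assert cart_total([]) == 0
    # Totals are the sum of price * quantity.
    assert cart_total([(500, 2), (250, 1)]) == 1250
    # Hypothetical business rule: 10% off orders of 10000 cents or more.
    assert cart_total([(10000, 1)]) == 9000
    # Just below the threshold, no discount applies.
    assert cart_total([(9999, 1)]) == 9999


def cart_total(items):
    """Stand-in for generated code; replaceable as long as the spec passes."""
    subtotal = sum(price * qty for price, qty in items)
    if subtotal >= 10000:
        return subtotal * 9 // 10
    return subtotal


# The spec is the gate: it raises AssertionError for a non-conforming candidate.
acceptance_spec(cart_total)
print("spec passed")
```

The spec stays fixed while candidate implementations can be regenerated as often as needed, which is where the guardrail comes from.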

10. Diagram-Driven Development

Diagram-Driven Development uses AI's multi-modal capabilities to generate software directly from visual representations. This builds on traditional diagram-driven design but adds automatic code generation, allowing developers to express complex systems visually and receive working implementations.

This technique is featured in tools like tldraw's "Make it Real" feature and Vercel's v0.dev, which can generate complete UI implementations from sketches or screenshots, or provision AWS services from a diagram.

11. Conversational Programming

Conversational Programming, as described by Simon Wardley, is a shift from traditional development methods where code is written line-by-line using formal syntax, towards an interactive, dialogue-driven approach. Instead of rigidly defining every aspect of a program upfront, developers engage in a back-and-forth "conversation" with an AI, scripting assistant, or intelligent system that understands intent and refines code iteratively.

Unlike traditional development, which requires strict adherence to syntax and manual structuring of logic, conversational programming operates at a higher level of abstraction, allowing developers to describe functionality in natural language. This approach allows programmers to focus more on what they want to achieve rather than the exact steps needed to implement it, making development more intuitive and adaptive.

12. Agentic Coding

Agentic Coding describes delegating entire coding tasks to AI agents that act more like collaborators than tools. Anthropic used the term to describe Claude Code as an “agentic coding” tool that plans, asks clarifying questions, and builds code in steps—taking initiative rather than just following prompts.

Which AI Coding Approach and Term Will Survive the Hype Cycle?

AI-assisted development (and, to a lesser extent, AI-native development, prompt-driven development, and generative programming) is becoming common, but the term is too broad and covers the entire software lifecycle. Approaches using pseudocode or voice commands are still niche.

Vibe Coding has gained attention as a term in AI-assisted development, but it mainly applies to early-stage coding: prototyping, frontend experiments, solo projects, and hobbyist software. It resonates with new developers starting with AI-first tools.

Experienced developers working on backend systems, distributed architectures, and core infrastructure tend to take a more structured approach in order to write long-lived and maintainable software. They will integrate AI’s generative capabilities with established engineering practices like acceptance testing (or its AI-native incarnation).

Diagram-Driven Development can enable rapid project initiation by transforming a new design sketch into working code through AI-powered code generation.

These terms do not necessarily overlap, and more than one of them will remain. Have you come across other terms describing how developers work with AI? Follow the discussion by signing up for the Generative Programmer newsletter or hit me up on X.