7 Steps to Make Your OSS Project AI-Ready

A maintainer’s guide to enabling seamless onboarding, contribution, and collaboration for humans and AI.

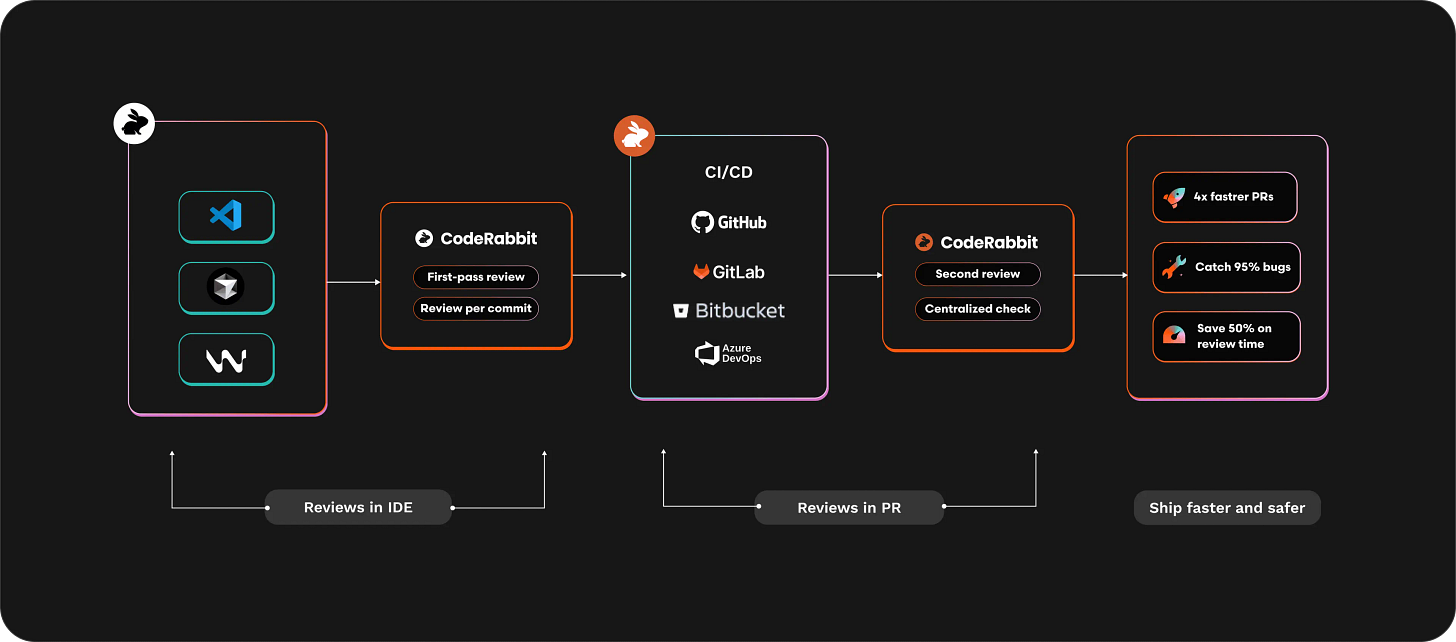

This edition of Generative Programmer is supported by CodeRabbit. Teams using CodeRabbit report a 50%+ reduction in code review time. It’s not just automated linting: it understands your codebase, learns your team’s patterns, and provides contextual feedback that actually matters.

Generative AI is changing how developers discover and consume open source software. It’s no longer enough to have a good README and contributor guide for humans; you also need to make your project welcoming to AI. Large Language Models (LLMs) are becoming the new distributors and consumers of software. They can read your code, parse your docs, write examples, and even explain your project to developers worldwide. As a maintainer, your project’s visibility and adoption will increasingly depend on how well it’s represented in these AI systems.

In this post, I’ll share a practical guide on how to make your open source project easier for LLMs to understand, and for humans to use through AI tools.

1. Make Your Project Discoverable by AI

When developers face a problem or research a topic, many no longer start with Stack Overflow or Google; they ask an LLM through ChatGPT, Claude, or a similar service. Questions such as “How do I tune Kafka for low latency?” or “What’s the best open-source MCP implementation for Kubernetes?” are now answered directly by LLMs.

When developers are in the zone inside their IDE and hit a common issue, such as a Python dependency error like “ModuleNotFoundError: No module named 'x'”, they ask the AI assistant built into the IDE how to fix it.

Modern AI systems combine their training data with live web search, scanning documentation, repositories, and blogs to generate up-to-date responses. But most project sites are designed for people, not machines: they are full of menus, ads, and nested pages. Critical details such as installation flags, version notes, or API parameters are often buried deep and hard for AI crawlers to find. As a result, your project may be misunderstood or overlooked, even if it’s well known.

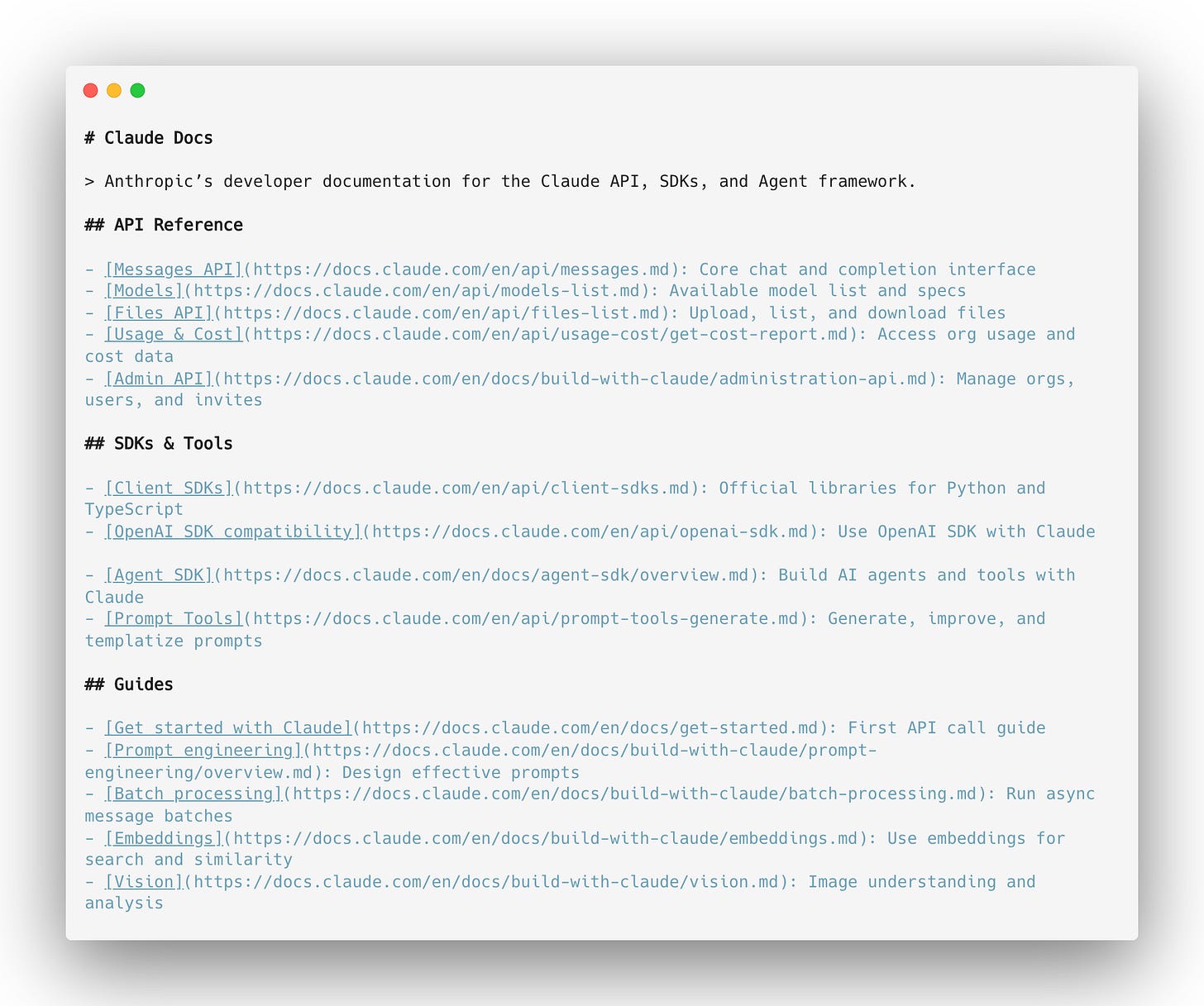

Using llms.txt to Help AI Find the Right Docs

llms.txt is a simple Markdown file placed at the root of your project’s site that gives AI models a clean, structured map of your most relevant documentation. Think of it as a robots.txt for language models, but instead of blocking crawlers, it guides them to the right content. It lists a short summary, key links (docs, APIs, SDKs, examples), and optional secondary sections.
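To make that structure concrete, here is a minimal Python sketch that renders an llms.txt file in the layout described at llmstxt.org: an H1 title, a blockquote summary, and H2 sections of Markdown link lists, with a section named “Optional” for material an LLM can skip when its context is tight. The project name, URLs, and the `build_llms_txt` helper are placeholders of our own, not part of any tool.

```python
def build_llms_txt(name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt document (hypothetical helper).

    `sections` maps an H2 title to (link text, URL, optional note) tuples.
    A section titled "Optional" marks links an LLM may skip.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for title, links in sections.items():
        lines.append(f"## {title}")
        lines.append("")
        for text, url, note in links:
            # Each entry is a Markdown link, optionally followed by ": note".
            entry = f"- [{text}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    # Exactly one trailing newline at the end of the file.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(build_llms_txt(
        "ExampleDB",  # placeholder project
        "ExampleDB is an embeddable analytical database.",
        {
            "Docs": [
                ("Quickstart", "https://example.org/docs/quickstart.md", "Install and first query"),
                ("API reference", "https://example.org/docs/api.md", ""),
            ],
            "Optional": [
                ("Changelog", "https://example.org/changelog.md", ""),
            ],
        },
    ))
```

In practice you would generate this at docs build time from your site navigation rather than by hand, so it stays in sync with the docs.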

Add /llms.txt to the root of your project website and keep it updated alongside your docs, so AI-powered search tools and assistants that read it can quickly understand what your project is and where to look next. The file can make your project easier to find, quote, and recommend in AI-driven tools like ChatGPT, Copilot, and Sourcegraph. Hundreds of websites already use it, including Supabase, DuckDB, Turso, Hugging Face, and Langfuse, and many more are listed in various directories.

llms.txt is an easy addition that can give visibility to your project in a world where LLMs are becoming the primary way of discovering and consuming information. As shown by the tracking site llmstxt.ryanhoward.dev, it’s not yet widely adopted, but the fact that Anthropic themselves use llms.txt and recommend it as part of agentic documentation is a strong signal that it’s the right direction to follow.

There are plenty of tools and plugins that can help you create and validate these files easily, such as llms_txt2ctx, vitepress-plugin-llms, and docusaurus-plugin-llms. To learn more, see llmstxt.org.
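If you hand-write the file, a quick pre-publish check can catch structural slips. The sketch below is a hypothetical lint, loosely based on the llmstxt.org format and not the official validator: it checks that the file opens with a single H1 title and that list items under H2 sections are Markdown links with optional notes.

```python
import re

# A list entry must look like "- [name](url)" or "- [name](url): notes".
LINK_ITEM = re.compile(r"^- \[[^\]]+\]\([^)\s]+\)(: .+)?$")


def lint_llms_txt(text: str) -> list[str]:
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    lines = [ln.rstrip() for ln in text.splitlines()]
    non_empty = [ln for ln in lines if ln]
    if not non_empty or not non_empty[0].startswith("# "):
        problems.append("first non-empty line must be an H1 title ('# Name')")
    if sum(1 for ln in lines if ln.startswith("# ")) > 1:
        problems.append("only one H1 title is expected")
    in_section = False
    for i, ln in enumerate(lines, start=1):
        if ln.startswith("## "):
            in_section = True  # list items after an H2 are link lists
        elif in_section and ln.startswith("- ") and not LINK_ITEM.match(ln):
            problems.append(f"line {i}: list item is not '- [name](url)' or '- [name](url): notes'")
    return problems
```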

2. Onboard AI Agents to Your Project

Open source projects today communicate their community policies and expectations through a variety of simple text files, for example, README.md, INSTALL.md, CONTRIBUTING.md, CODE_OF_CONDUCT.md, DEVELOPMENT.md, and ISSUE_TEMPLATE.md. These files explain how to set up the project, contribute, follow coding guidelines, or report issues. This structure has worked well for years when humans were the primary readers.

Meanwhile, the number of developers using AI‑enabled IDEs and CLI tools is growing rapidly. Millions of developers rely daily on Cursor (now surpassing a million users), Claude Code CLI, and OpenAI Codex. Yet these users are underserved: their tools can’t easily interpret a project’s policies, build steps, contribution rules, and community best practices. As a result, contributing through AI‑assisted tools often means manual onboarding, lower‑quality pull requests, and contributor-maintainer tension.

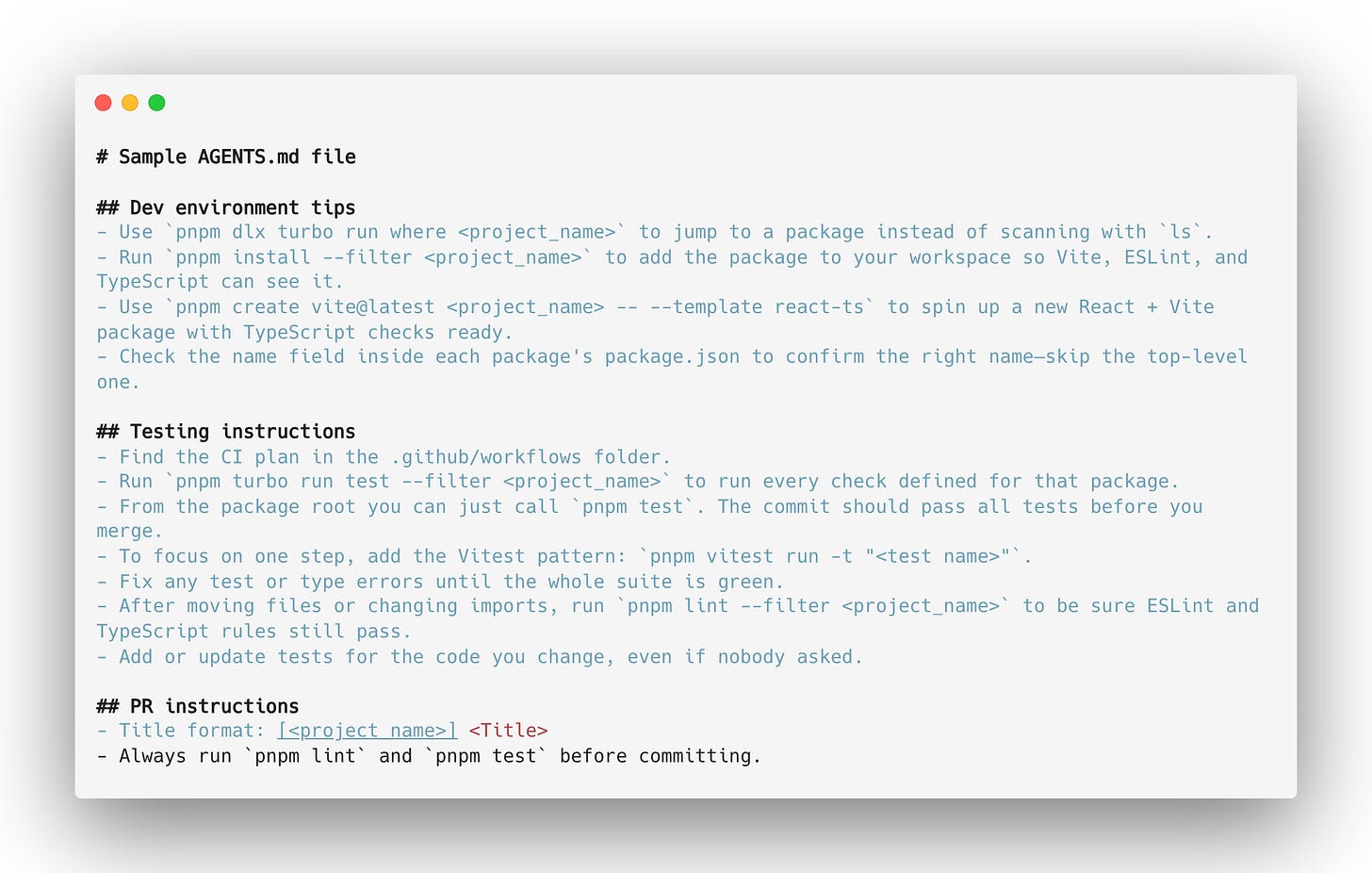

Teaching Coding Assistants Your Project’s Way of Working

This is where agent context files come in. The idea started with individual IDEs introducing more advanced ways of sharing project context: for example, .cursorrules in Cursor, which began as a single file of simple rules and later evolved into a structured .cursor/rules folder hierarchy, or CLAUDE.md, used by Claude Code to encode build and contribution instructions. These formats help AI-enabled tools understand how to build, test, and navigate a codebase.

As the space fragmented into competing formats, AGENTS.md, an effort led by OpenAI, began to emerge as the most widely adopted standard for defining project context. Today it is becoming the common denominator across AI-assisted environments, with over 30,000 pull requests on GitHub adding AGENTS.md files to open-source projects.

AGENTS.md files act as a README for AI agents. They summarize how to build, test, and contribute to your project, covering environment setup, dependency installation, code style, test execution, and commit message rules — but in a way that AI tools can parse and follow. Supported by major IDEs and CLI tools such as Cursor, VS Code, GitHub Copilot, Aider, RooCode, Gemini CLI, Jules, Factory, and Devin, these files help AI agents understand your project’s structure and workflow.
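AGENTS.md is free-form Markdown with no required schema, but a project can still decide which topics its own file must cover and check that in CI. A minimal sketch, assuming your project requires the hypothetical headings “Setup”, “Testing”, “Code style”, and “Commit messages” (our choice, not part of any spec):

```python
import re

# Hypothetical project convention: AGENTS.md must have these headings.
REQUIRED_SECTIONS = ["Setup", "Testing", "Code style", "Commit messages"]


def missing_sections(markdown: str, required=REQUIRED_SECTIONS) -> list[str]:
    """Return required section names that do not appear as headings."""
    # Collect heading titles at any level (#..######), compared case-insensitively.
    headings = {
        m.group(1).strip().lower()
        for m in re.finditer(r"^#{1,6}[ \t]+(.+)$", markdown, flags=re.MULTILINE)
    }
    return [s for s in required if s.lower() not in headings]
```

In CI you would read AGENTS.md from the repository root, pass its text to `missing_sections`, and fail the job if the returned list is non-empty.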

With these files, AI assistants can follow project conventions and produce contributions that align with human developers’ expectations, reducing friction in collaborative development.

These files are often paired with AI-assisted development guides that show contributors how to configure their IDEs and CLIs for the best experience — such as Angular’s AI development guide and Convex’s guide for Cursor.

In short, AGENTS.md and accompanying IDE setup guides provide the missing layer of context between your code and the growing ecosystem of AI-powered development tools. It’s no longer just about onboarding developers; it’s also about onboarding AI agents. This lightweight file helps agents understand your project’s structure, style, and expectations, so they can contribute effectively and make every developer more productive from day one.

3. Define Your AI Contribution Policy

AI coding tools are now part of everyday development, but most open‑source projects still lack clear policies on how and when they can be used. Without guidance, contributors interpret “acceptable AI use” differently, leading to inconsistent pull requests, hidden AI‑generated code, and extra work for maintainers who must assess both quality and authenticity.

Setting Ground Rules for AI‑Assisted Contributions

Projects like Ghostty, Gramps, Servo, and Scientific Python illustrate different approaches, from allowing AI with disclosure to banning it outright. These examples show there’s no universal answer, only different ways to avoid tension and maintain trust. A great reference is the Open Infrastructure Foundation’s policy on AI‑generated content:

“As a contributor, you are responsible for the code you submit, whether you use AI tools or write it yourself. Some AI tools offer settings, features, or modes that can help, but these are no substitute for your own review of code quality, correctness, style, security, and licensing.”

It’s worth emphasizing that every pull request is still reviewed and maintained by humans, not machines. Contributors must ensure that AI‑assisted code meets the same quality, readability, and consistency expectations as any manually written code.

There’s also a broader movement around structured, transparent AI collaboration, such as the Vibe Coding Manifesto. It advocates integrating AI into open source responsibly: not rejecting it, but managing it through clear PR rules, contributor transparency, and supporting documents like PROMPTING.md. The manifesto also outlines practical steps such as creating small, focused PRs, running self‑reviews and tests before submission, adding clear AI attributions, and limiting concurrent PRs to avoid “PR spam.” These concrete recommendations help maintain quality while keeping the review workload manageable.
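The “limit concurrent PRs” recommendation is easy to enforce mechanically. A minimal sketch, assuming open PRs have already been fetched from your forge’s API into dicts with an `author` field, and assuming a project-chosen cap of three open PRs per contributor (both hypothetical):

```python
from collections import Counter

# Hypothetical cap on open PRs per contributor; pick your own number.
MAX_OPEN_PRS_PER_AUTHOR = 3


def authors_over_limit(open_prs: list[dict],
                       limit: int = MAX_OPEN_PRS_PER_AUTHOR) -> dict[str, int]:
    """Return {author: open_pr_count} for authors above the limit.

    Each PR dict is assumed to carry an "author" key (field name is an assumption).
    """
    counts = Counter(pr["author"] for pr in open_prs)
    return {author: n for author, n in counts.items() if n > limit}
```

A bot could then comment on the newest PR from each flagged author asking them to land or close existing work first, rather than closing anything automatically.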

Explicit AI contribution guidelines help maintain a healthy balance between speed and quality. Whether your project allows, restricts, or just tracks AI use, defining the rules early prevents confusion and keeps your contributor community aligned.
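One lightweight way to make a policy enforceable is a PR template with task-list checkboxes, plus a check that reads the PR description. The sketch below assumes a hypothetical template with one accountability box and two mutually exclusive AI-disclosure boxes; the wording and the `policy_problems` helper are our own, so adapt them to whatever your CONTRIBUTING.md actually says.

```python
import re

# Hypothetical checkbox labels from a PR template; adapt to your own wording.
ACCOUNTABILITY = "I have reviewed and tested every change in this PR myself"
AI_USED = "AI tools were used to write part of this PR"
AI_NOT_USED = "No AI tools were used to write this PR"


def checked(body: str, label: str) -> bool:
    """True if the PR body contains a ticked task-list item with this label."""
    # GitHub-style task list item: "- [x] label" (x or X means checked).
    pattern = r"^\s*[-*] \[[xX]\] " + re.escape(label)
    return re.search(pattern, body, flags=re.MULTILINE) is not None


def policy_problems(body: str) -> list[str]:
    problems = []
    if not checked(body, ACCOUNTABILITY):
        problems.append("author has not confirmed personal review of the changes")
    # Exactly one disclosure box must be ticked: both or neither is a problem.
    if checked(body, AI_USED) == checked(body, AI_NOT_USED):
        problems.append("tick exactly one of the AI-usage disclosure boxes")
    return problems
```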

4. Scale Code Reviews with AI

Once your project is more discoverable, has clear contribution guidelines, and has onboarded AI agents, you’ll start seeing more AI-assisted activity and more pull requests. Reviewing those PRs becomes one of the primary jobs of maintainers. Some PRs might come from bots or low‑quality AI output and can be easily rejected, but many will vary significantly in quality and completeness. The most practical way to keep up with this volume of AI‑assisted contributions while maintaining quality is to use AI for reviewing, too.

Bringing AI into the Review Process

AI can perform first‑pass reviews, freeing human maintainers to focus on architecture, design, and logic rather than repetitive or stylistic feedback. Such tools act as automated reviewers that integrate directly with GitHub. They can:

Issue Triage & Labeling – Categorize issues automatically

Documentation Sync – Ensure code and docs remain aligned

External Contributor Reviews – Apply stricter checks for new contributors

Custom Review Checklists – Enforce team standards and consistency

Path‑Specific Reviews – Trigger deeper analysis on critical file changes

Scheduled Maintenance – Automate repository health checks

Security‑Focused Reviews – Detect vulnerabilities with OWASP‑aligned checks
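Most review bots let you express rules like the path-specific and external-contributor checks above in their own configuration. Independent of any tool’s actual config format, the routing logic boils down to something like this minimal Python sketch, where the `CRITICAL_PATHS` patterns and the profile fields are hypothetical:

```python
from fnmatch import fnmatch

# Hypothetical glob patterns for files that deserve a deeper review pass.
CRITICAL_PATHS = ["src/auth/*", "src/crypto/*", ".github/workflows/*"]


def review_profile(changed_files: list[str], first_time_contributor: bool) -> dict:
    """Decide review depth from changed paths and contributor status."""
    critical = [f for f in changed_files
                if any(fnmatch(f, pattern) for pattern in CRITICAL_PATHS)]
    return {
        "depth": "deep" if critical else "standard",
        "critical_files": critical,
        # Stricter checks (e.g. license headers, security scan) for new contributors.
        "strict": first_time_contributor,
    }
```

Note that fnmatch’s `*` also matches `/`, so `src/auth/*` covers nested files as well.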

Among the most popular AI tools for code reviews are:

CodeRabbit – the most widely adopted open‑source‑friendly AI code review tool.

CodeRabbit integrates directly with GitHub and offers free plans for open‑source projects. It supports both IDE‑based inline reviews and PR‑based multi‑file reviews, giving teams flexibility for internal and external contributions. Its context‑aware reviews understand file dependencies and coding patterns, and it can review uncommitted changes directly in the IDE before a PR is even created, reducing friction and catching issues early.

Claude Code Action – integrates Anthropic’s Claude for PR review and comment generation, providing natural‑language insights and feedback.

OpenAI Codex Cloud Review – runs as a GitHub Action to automate AI‑based feedback on pull requests and highlight potential issues.

As AI assistants generate more code, the number of incoming PRs will rise. Having AI review these PRs first helps keep quality consistent without overloading maintainers. The workflow becomes: AI writes → AI reviews → humans approve. That loop keeps velocity high while maintaining the project’s standards.
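The “AI writes → AI reviews → humans approve” loop can be expressed as a merge gate. This is a hypothetical sketch, not any platform’s branch-protection API: the AI review is one required signal, and at least one human approval is always required regardless of configuration.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    ai_review_passed: bool  # first-pass bot review found no blocking issues
    human_approvals: int    # approving reviews from maintainers
    ci_green: bool          # tests and linters passed


def can_merge(pr: PullRequest, required_human_approvals: int = 1) -> bool:
    # The AI review is a gate, never a substitute: at least one human must approve.
    return (pr.ci_green
            and pr.ai_review_passed
            and pr.human_approvals >= max(1, required_human_approvals))
```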

5. Make Your APIs Agent-Ready

If your project already exposes an API or CLI, or ships a client SDK, it’s time to think about how AI agents can interact with it directly. Developers are no longer the only consumers of APIs; AI assistants are becoming first‑class users, too. Yet most APIs today are not agent‑ready: assistants can read about them but cannot interact with them in a structured, safe way.

Opening Your APIs to AI Agents with MCP

The Model Context Protocol (MCP) is an emerging open standard that lets AI agents connect to tools, APIs, and data sources. Exposing your API or CLI via MCP makes your project agent‑ready, enabling AI systems to query, test, and trigger actions contextually instead of copy‑pasting examples. It improves discoverability, automation, and supports AI‑native workflows where agents use your project the same way as developers do. It also connects your project to the growing MCP ecosystem of compatible tools, browsers, and registries.
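Under the hood, MCP messages are JSON-RPC 2.0: a client discovers tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The toy dispatcher below shows those message shapes using only the Python standard library. It skips the initialize handshake, transports, and capability negotiation, so it is not a spec-compliant server; the `describe_topic` tool is hypothetical and returns a stub string. For a real server, use an official MCP SDK or FastMCP.

```python
import json

# Hypothetical tool registry: name -> description, JSON Schema, and handler.
TOOLS = {
    "describe_topic": {
        "description": "Describe a topic (hypothetical stub).",
        "inputSchema": {
            "type": "object",
            "properties": {"topic": {"type": "string"}},
            "required": ["topic"],
        },
        "handler": lambda args: f"stub description for topic {args['topic']}",
    },
}


def _error(req_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        # Advertise each tool's name, description, and input schema.
        result = {"tools": [{"name": name, "description": t["description"],
                             "inputSchema": t["inputSchema"]}
                            for name, t in TOOLS.items()]}
    elif method == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # Unknown tool: reported as a JSON-RPC protocol error (invalid params).
            return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        try:
            text = tool["handler"](params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        except Exception as exc:
            # Failures inside the tool are reported in the result, not as protocol errors.
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```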

Projects like Apache Kafka, Turso, DuckDB, and Redis are early examples of agent‑ready projects. Some already expose MCP endpoints that let assistants query data or perform operations directly. Cloud services such as Confluent Cloud and Supabase have gone further, hosting full MCP servers that make their APIs seamlessly accessible to AI tools.

To get started, tools like FastMCP can automatically generate MCP servers from any OpenAPI specification, instantly turning your existing API into one that AI models can use through MCP. Over time, you can evolve toward a purpose‑built MCP server. Platforms such as Speakeasy also provide MCP server generators with free plans for open‑source projects, making it easy to experiment.
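Conceptually, an OpenAPI-to-MCP generator turns each API operation into a tool whose input schema comes from the operation’s parameters. The sketch below illustrates only that mapping; it is not FastMCP’s or Speakeasy’s algorithm, and it ignores request bodies, `$ref` resolution, and authentication.

```python
import re

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def openapi_to_tools(spec: dict) -> list[dict]:
    """Map each OpenAPI operation to an MCP-style tool descriptor (toy version)."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            props, required = {}, []
            for param in op.get("parameters", []):
                # Each path/query parameter becomes one property of the input schema.
                props[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required"):
                    required.append(param["name"])
            # Prefer operationId; otherwise derive a name like "get_topics_name".
            slug = re.sub(r"[^A-Za-z0-9]+", "_", path).strip("_")
            tools.append({
                "name": op.get("operationId") or f"{method}_{slug}",
                "description": op.get("summary", f"{method.upper()} {path}"),
                "inputSchema": {"type": "object", "properties": props,
                                "required": required},
            })
    return tools
```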

In short, if your project has an API, offering MCP‑based access is becoming table stakes. Adding MCP support transforms your project from something AI agents can only read about into something they can use directly, unlocking the next phase of open‑source and AI integration.

6. Turn Docs into Conversational Interfaces

Developers don’t have time to dig through endless documentation pages or wait for answers in Discord. They expect instant help, the same experience they’re used to from AI tools. Yet most open‑source documentation is static, can’t respond to real‑time questions, and often falls out of date as the code changes faster than the docs.

How Conversational Docs Improve Developer Onboarding

Adding an AI‑powered chat interface to your docs lets users ask questions that are answered from your project’s documentation, issues, and source code. Tools like Kapa.ai, Inkeep, and CrawlChat can index your site, GitHub repo, or forums and deliver answers instantly inside your website, Slack, or Discord. Such services improve onboarding, reduce repeated support requests, and help users learn faster through natural conversation.
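Behind most “chat with the docs” widgets is a retrieval step: find the passages most relevant to a question, then hand them to an LLM to compose an answer with citations. Hosted tools typically use embeddings for this; the minimal sketch below swaps in plain keyword overlap so it runs with the standard library only, and the helper names are our own.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(doc: str) -> list[str]:
    # Split on blank lines so each paragraph is a retrievable unit.
    return [p.strip() for p in doc.split("\n\n") if p.strip()]


def top_passages(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (source, passage) pairs ranked by keyword overlap."""
    q = Counter(tokenize(question))
    scored = []
    for source, text in docs.items():
        for passage in chunk(text):
            words = Counter(tokenize(passage))
            # Count shared tokens, capped by how often each appears in the question.
            score = sum(min(q[w], words[w]) for w in q)
            if score:
                scored.append((score, source, passage))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, passage) for _, source, passage in scored[:k]]
```

The returned (source, passage) pairs would then go into the LLM prompt, which lets the answer link back to the exact docs page.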

If these tools are not affordable for your project, you can also build a simple in‑house alternative like CrewAI’s “Chat with the Docs”, or index your documentation with Context7 and expose it as an MCP server, allowing users to query your docs directly from their IDE.

This is an evolving space, but it’s likely that in the future, all open‑source projects will have built‑in AI chat interfaces, offered for free, either sponsored by their open‑source foundations or integrated directly into platforms like GitHub. For now, you have to find ways to cover this cost.

7. Automate Community Tasks with AI

As open-source communities grow and AI-assisted development accelerates, maintainers face a constant flow of new issues, PRs, and support questions. Repetitive tasks such as triaging, labeling, answering common questions, and closing stale tickets can consume hours that could be spent improving the project itself.

Let AI Handle the Repetitive Work

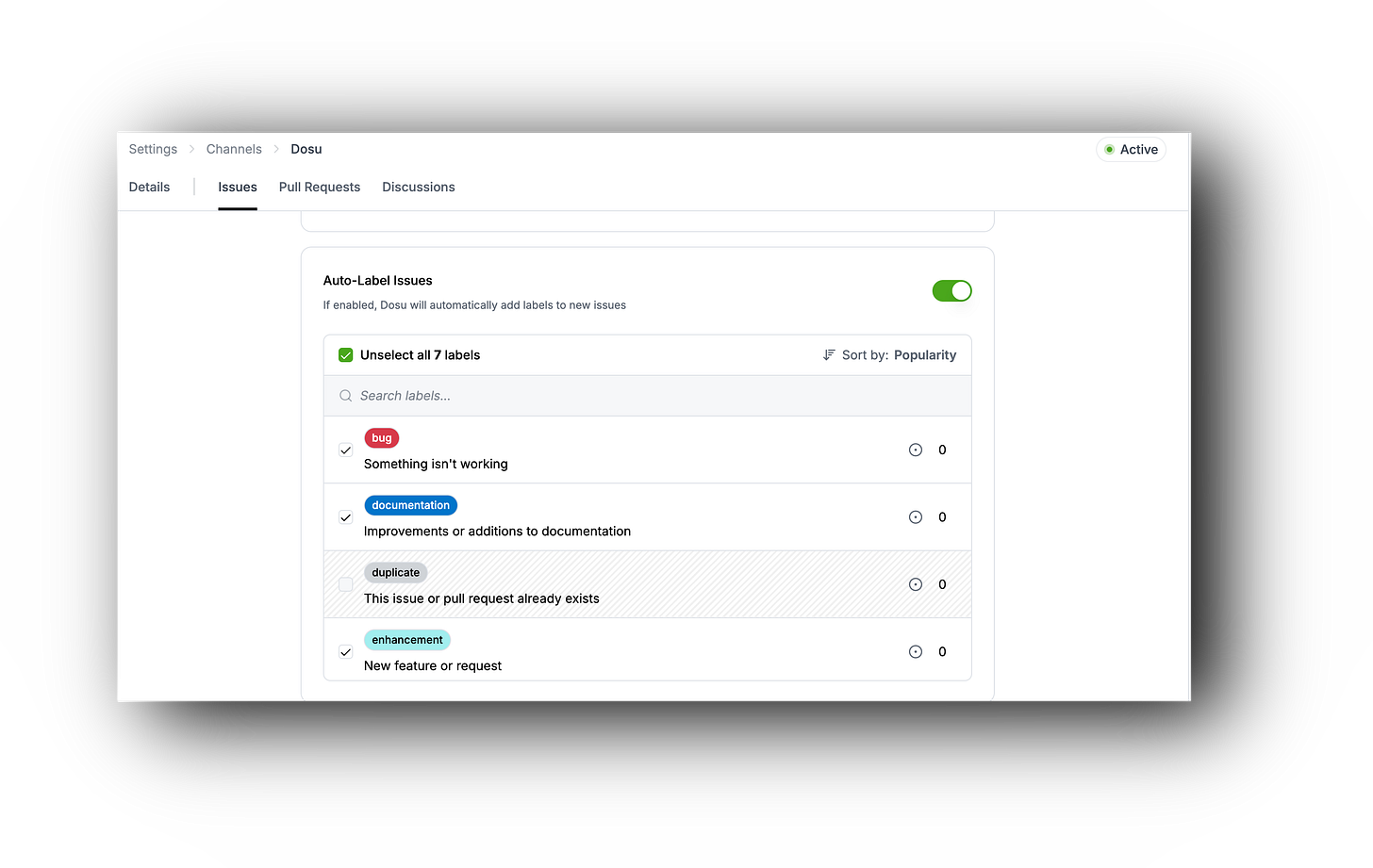

AI maintainers like Dosu automate many of these repetitive tasks. They can triage issues, answer contributor questions, close inactive discussions, remind authors about missing documentation, and enforce contribution guidelines — all while keeping conversations active and polite. Dosu already works with major projects like LangChain, Prisma, Strapi, Apache Airflow, and several CNCF initiatives, and is free for open-source use (with CNCF and ASF partnerships in place).
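Closing inactive discussions starts with finding them. A minimal sketch, assuming issues have been fetched into dicts with `number`, timezone-aware `updated_at`, and `labels` fields, and assuming a project-chosen 60-day window and exempt labels (all hypothetical):

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

STALE_AFTER = timedelta(days=60)        # hypothetical inactivity window
EXEMPT_LABELS = {"pinned", "security"}  # hypothetical labels never auto-closed


def stale_issues(issues: list[dict], now: Optional[datetime] = None) -> list[int]:
    """Return numbers of non-exempt issues with no activity for longer than STALE_AFTER."""
    now = now or datetime.now(timezone.utc)
    return [
        issue["number"]
        for issue in issues
        if now - issue["updated_at"] > STALE_AFTER
        and not EXEMPT_LABELS & set(issue.get("labels", []))
    ]
```

A careful bot warns first and closes only after a grace period, so a human can always reopen or pin the issue.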

In short, AI maintainers act as round-the-clock assistant maintainers, handling the administrative overhead so human maintainers can focus on technical leadership, community growth, and long-term vision.

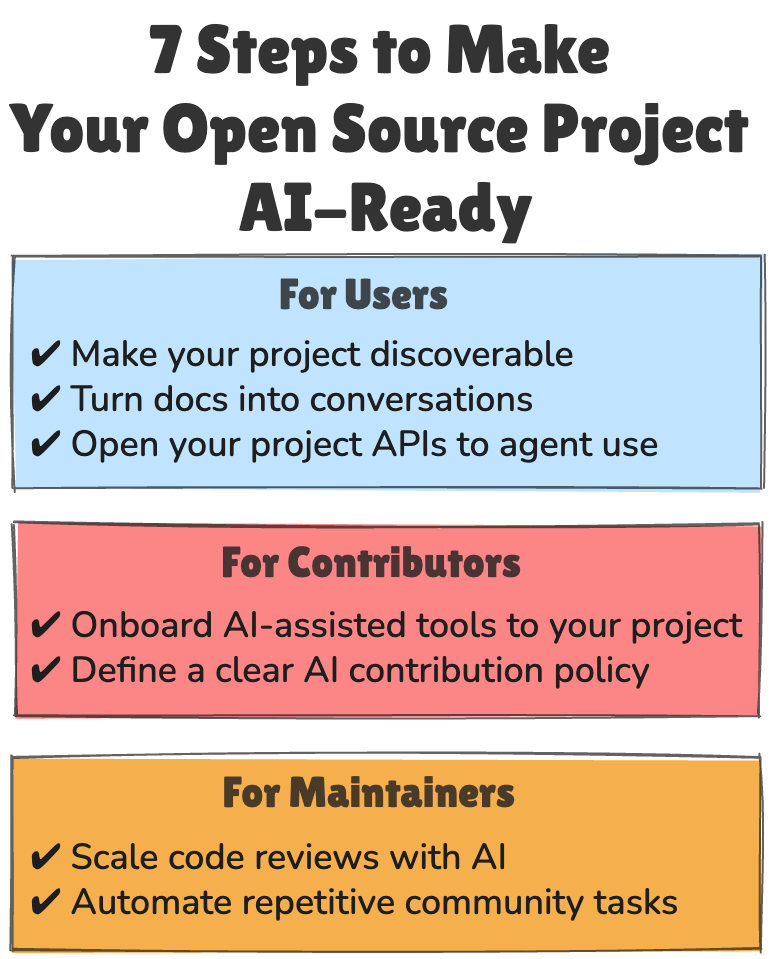

AI-Ready Project Checklist for Maintainers

Every open-source project has its own culture, workflows, and pace of change. Yet as AI rapidly reshapes how developers build and collaborate, standing still is the worst option. The right move is to adapt early and use AI as an advantage, not a disruption. This checklist helps maintainers identify where to start.

For Users

Make your project discoverable: Create llms.txt listing key docs, APIs, and examples so LLMs can index your project. This is a top-of-funnel lever, but with still-unproven impact; treat it as a follow-on step after the other core items.

Turn docs into conversations: Deploy a tool like Kapa.ai trained on your documentation and community resources to answer user questions instantly. This is no longer a luxury: users now expect conversational help, and it significantly improves the new-user experience.

Open your project APIs to agent use: If you have a CLI, SDK, or API, create an MCP server so AI agents can call and use it directly. Comprehensive MCP support makes your project easier to integrate with AI tools and helps it stand out among alternatives.

For Contributors

Onboard AI-assisted tools to your project: Add AGENTS.md with build, test, and style instructions so AI tools can quickly figure out how to work with your code. Thousands of projects already use it, making it one of the easiest and highest-impact improvements.

Define a clear AI contribution policy: Update CONTRIBUTING.md and PR templates with a reminder that contributors are responsible for submission quality and correctness, regardless of which tools they use. This reinforces human accountability while promoting transparency as AI-assisted contributions grow.

For Maintainers

Scale code reviews with AI: Connect CodeRabbit or a similar asynchronous AI bot to automatically review pull requests and provide feedback (free plans are available for open-source projects). A small setup effort here pays back quickly by reducing reviewer fatigue and catching issues early.

Automate repetitive community tasks: Install Dosu or a similar GitHub bot to triage issues, answer questions, and keep discussions organized. Automation beyond code reviews helps sustain healthy collaboration and saves maintainers valuable time.

Together, these practices ensure your project is set up to benefit from AI at every level: users can discover it, get answers instantly, and use it with their AI tools; contributors have clear onboarding and AI usage guidelines; and maintainers gain automation for reviews and community operations. The space is evolving fast, so keep an eye on relevant tools and practices, subscribe, and share how you’re using AI in open source.